On Saturdays, when the weather is not fit for the playground, I take my toddler to a tumble gym where he can run, climb, and kick balls around with other kids his age. Parents must accompany their kids in the play area, since this is a free-form play center with no staff on the floor (other than the front desk attendant). As a market researcher and a perpetual observer of the human condition, I’ve noticed that these parents fall into three distinct groups: the super-involved group, the middle-of-the-road group, and the barely-involved group.

The super-involved parents take full control of their child’s playtime. They grab the ball and throw it to their kid. They build forts. They chase the kids around. They guide every minute of play by initiating all the activities themselves. “Over here, Jimmy! Let’s build a ramp and climb up! Now let’s build a fort! Ooh, let’s grab that ball and kick it!”

The middle-of-the-road group lets the kids play on their own, but these parents also keep an eye out and step in when needed. For example, a parent in this group would intervene if the child looked dangerously unstable while climbing the fort, or if the child stole another kid’s ball and sparked a meltdown.

The barely-involved parents tend to lean against the wall and stay on their phones, probably checking Facebook. They don’t know where their kid is or what their kid is doing. For all they know, their child could be scaling a four-foot wall and jumping onto another kid’s head.

This demonstrates a simple fact: people are more alike than they are different. That is why I love segmentation studies. It’s fascinating that almost everyone can be grouped with others who behave similarly.

At CMB, we strive to make our segmentation studies relevant, meaningful, and actionable. To this end, we have found the following five-point plan valuable for guiding our segmentation studies:

- Start with the End in Mind: Determine how the definition and understanding of segments will be used before you begin.

- Allow for Multiple Bases: Take a comprehensive, model-based approach that incorporates all potential bases.

- Have an Open Mind: Let the segments define themselves.

- Leverage Existing Resources: Harness the power of your internal databases.

- Create a Plan of Action: Focus on internal deployment from the start.

Because each segmentation study is different, choosing the right segmentation criteria is what makes the resulting segments actionable. In the case of the tumble gym patrons, we might recommend basing marketing efforts on a psychographic segmentation. What are the parents’ parenting philosophies? How do those philosophies motivate them, and how can marketing efforts target the low-hanging fruit?

Incidentally, I find that I fall into the middle segment.

Jessica is a Data Manager at CMB and can’t help but mentally segment the population at large.

Want to learn more about segmentation? In “The 5 C’s of Great Segmentation Socializers,” Brant Cruz shares five tips for making sure your segmentation is embraced and used in your organization.

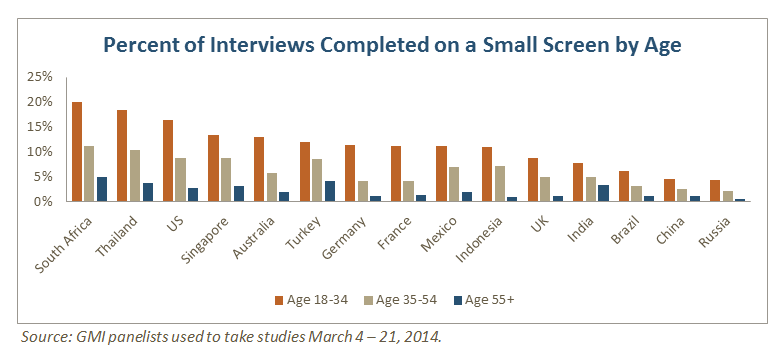

Webinar: Modularized Research Design for a Mobile World

Join us and Research Now to learn about the modularized traditional purchasing survey we created, which allows researchers to reach mobile shoppers en masse. We'll review best practices for sampling and weighting, study design considerations, and our “data-stitching” process.

The ubiquity of mobile devices has opened up new opportunities for market researchers on a global scale.

I know what you are thinking...“What the heck is she TALKING about? How can