Is it time for the “traditional” market researcher to join the ranks of the milkman and switchboard operator? The pressure to provide more actionable insights, more quickly, has never been so high. Add new competitors into the mix, and you have an industry feeling the pinch. At the same time, primary data collection has become substantially more difficult:

- Response rates are decreasing as people are increasingly inundated with email requests

- Many among the younger crowd don’t check their email frequently, favoring social media and texting

- Spam filters have become more effective, so potential respondents may not receive email invitations

- Cell-phone-only households are becoming the norm, and calls are easily avoided using voicemail, caller ID, call-blocking, and privacy managers

- Traditional questionnaire methodologies don’t translate well to the mobile platform—it’s time to ditch large batteries of questions

It’s just harder to contact people and collect their opinions. The good news? There’s no shortage of researchable data. Quite the contrary, there’s more than ever. It’s just that market researchers are no longer the exclusive collectors—there’s a wealth of data collected internally by companies as well as an increase in new secondary passive data generated by mobile use and social media. We’ll also soon be awash in the Internet of Things, which means that everything with an on/off switch will increasingly be connected to one another (e.g., a wearable device can unlock your door and turn on the lights as you enter). The possibilities are endless, and all this activity will generate enormous amounts of behavioral data.

Yet, as tantalizing as these new forms of data are, they’re not without their own challenges. One such challenge? Barriers to access. Businesses may share the data they collect with researchers, and much social media content is publicly accessible, but what about data generated by mobile use and the Internet of Things? How can researchers get their hands on this aggregated information? And once it’s acquired, how do you align dissimilar data for analysis? You can read about some of our cutting-edge research on mobile passive behavioral data here.

We also face challenges in striking the proper balance between sharing information and protecting personal privacy. However, people routinely trade personal information online in exchange for product discounts or more personalized applications. So how much is shared, and what, depends in part on what consumers gain. It’s reasonable to give up some privacy for meaningful rewards, right? Health insurers now offer discounts based on shopping habits and on information collected by health-monitoring wearables. Auto insurers already do something similar, offering discounts based on devices that monitor driving behavior.

We are entering an era of real-time analysis capabilities. The kicker is that with real-time analysis comes the potential for real-time actionable insights to better serve our clients’ needs.

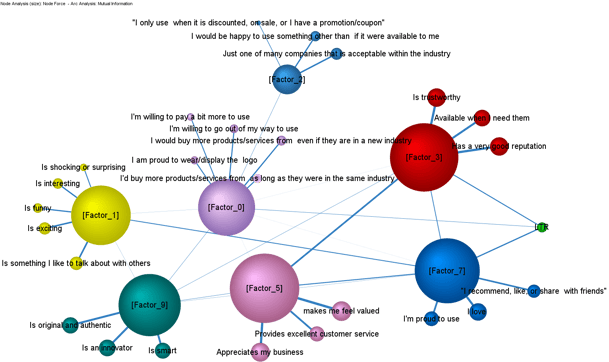

So, what’s today’s market researcher to do? Evolve. To avoid marginalization, market researchers need to keep understanding client issues and cultivating insights into consumer behavior. To do so effectively in this new world, they need to embrace new and emerging analytical tools and mine data from multiple disparate sources, bringing together the best of data science and knowledge curation to consult and partner with clients.

So, we can say goodbye to “traditional” market research? Yes, indeed. The market research landscape is constantly evolving, and the insights industry needs to evolve with it.

Matt Skobe is a Data Manager at CMB with keen interests in marketing research and mobile technology. When Matt reaches his screen-time quota for the day, he heads to Lynn Woods for gnarcore mountain biking.

As I wrote in

The explosion of mobile web and mobile app usage presents enormous opportunities for consumer insights professionals to deepen their understanding of consumer behavior, particularly for “in the moment” findings and for tracking consumers over time (when they aren’t actively participating in research, which is 99%+ of the time for most people). Insight nerds like us can’t ignore this burgeoning wealth of data; it is a potential goldmine. But working with passive mobile behavioral data brings plenty of challenges, too. It looks, smells, and feels very different from self-reported survey data: