Who are the mobile consumers?

Let’s get this straight: I am not addicted to my smartphone. Unlike so many of my fellow train commuters who stare zombie-eyed into their small screens, I am not immersed in a personal relationship with pixels. I have an e-Reader for that. But my smartphone IS my lifeline. I’ve come to depend exclusively on my phone to keep me on time and on schedule, to entertain me (when not using my e-Reader), to stay in touch with family and friends, and to keep up to date with my work email. It’s my primary source for directions, weather, news, photography, messaging, and banking, and a regular source for payment, shopping, and ticketing/reservations. I haven’t purchased a PC in nearly a decade, and I don’t have a landline. I also use my smartphone to take market research questionnaires, and I am far from alone.

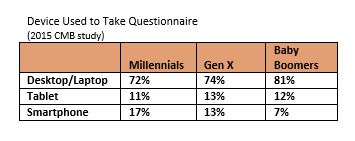

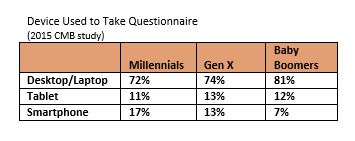

Data on smartphone usage aligns with my personal experience. In a recent CMB online study of U.S. consumers, optimized for mobile devices, 1 in 6 Millennials completed the questionnaire on a smartphone. Other studies report similar results, and major panel vendors are seeing over half of Millennials joining their panels via a mobile device. These figures illustrate the issue with representativeness: a study that does not accommodate mobile respondents risks missing a sizable share of its audience.

How do we adapt?

Much has been hypothesized about the future of market research under the new paradigm of mobile commerce, big data, and cloud services. New technologies and industry convergence (not just mobile) have brought sweeping changes in consumer behaviors, and market researchers must adapt.

A key component of successful adaptation will be greater integration of primary market research with other data streams. The promise of passive or observational data is captivating, but it is still largely in the formative stages. (For more on passive data, check out our recent webinar.) We still need, and will likely always need, active “please tell me” research. The shift from phone to online data collection has quickly given way to an urgent shift to mobile data collection (or at least device-agnostic interviewing). Our industry has lagged behind because the consumer experience has become so personalized, and because the trust/value equation for tapping into consumers’ experiences is challenging. Tackling mobile market research with tactical solutions is a necessary step in this transition.

What should we do about it?

- Understand your current audience. Researchers need to determine how important mobile data collection is to the business decision and decide how to treat mobile respondents. You can require all respondents to use a mobile device, allow some to use one, or exclude mobile respondents altogether. Each option has its own criteria and considerations, as does the expected mix of feature phones, smartphones, tablets, and PCs. The audience will determine the sample source and representation that must be factored into the study design. Ultimately, this has a huge impact on the validity and reliability of the data. Respondent invitations need to state which devices are not suitable for a particular survey.

- Design for mobile. If mobile participation is important, researchers should use a mobile-first questionnaire design. Mobile-optimized or mobile-friendly surveys typically need to be shorter, use concise language, avoid complex grids and answering mechanisms, and have fewer answer options, so they can be supported on a small screen and keep respondents focused on the activity. In some cases, questionnaire modularization or data stitching can be used to help adhere to mobile design standards.

- Test for mobile. All questions, images, etc. need to display properly on a variety of screen sizes and within the bandwidth capacity of the devices being used. Accommodating Android and iOS devices covers most users. If app-based surveys are being used, researchers need to ensure that the latest versions can be downloaded and are bug-free.

- Apply data protection and privacy standards. Mobile market research comes with a unique set of conditions and challenges that impact how information is collected, protected, and secured. Research quality and ethical guidelines specific to mobile market research have been published by CASRO, ESOMAR, the MMRA (Mobile Marketing Research Association), and others.

- Implement Mobile Qualitative. The barriers are lower, and researchers can leverage the unique capabilities of mobile devices quite effectively with qualitative research. Most importantly, willing participants are mobile, which makes in-the-moment research possible. Mobile qualitative is also a great gateway to explore what’s possible for mobile quantitative studies. See my colleague Anne Hooper’s blog for more on the future of qualitative methodologies.

- Promote Research-on-Research. Experts need to conduct and publish additional research-on-research studies that advance understanding of how to treat mobile respondents and utilize passive data, location tracking, and other capabilities that mobile devices provide. We also need stronger evidence of what works and what doesn’t work in execution of multi-mode and mobile-only studies across different demographics, in B2B studies, and within different countries.

But perhaps the most important thing to remember is that this is just a start. Market researchers and other insight professionals must evolve from data providers into integrated strategic partners, pairing technology (not just mobile) with industry expertise to focus on decision-making, risk reduction, and growth.

Brian is a Senior Project Manager for Chadwick Martin Bailey, the photographer of the image in this post, and an 82 percenter—he is one of the 82% of mobile phone owners whose phone is with them always or most of the time.

Watch our recent webinar that discusses the results of our self-funded Consumer Pulse study on the future of the mobile wallet.

Dear Al,

As I wrote in

The explosion of mobile web and mobile app usage presents enormous opportunities for consumer insights professionals to deepen their understanding of consumer behavior, particularly for “in the moment” findings and for tracking consumers over time (when they aren’t actively participating in research, which is 99%+ of the time for most people). Insight nerds like us can’t ignore this burgeoning wealth of data; it is a potential goldmine. But working with passive mobile behavioral data brings plenty of challenges, too. It looks, smells, and feels very different from self-reported survey data:
